It is a sad commentary on our profession that we have had to adopt the phrase "Normalization of Deviance" as our own. It refers to when pilots deviate from Standard Operating Procedures so often that their SOP-less practice becomes the new norm. It seems that with each couple of passing years we have a new poster child in the case history books and right now that prime example belongs to Gulfstream IV N121JM, which crashed attempting to take off from Hanscom Field, Bedford, Massachusetts with its gust lock engaged.

— James Albright

Updated: 2016-10-01

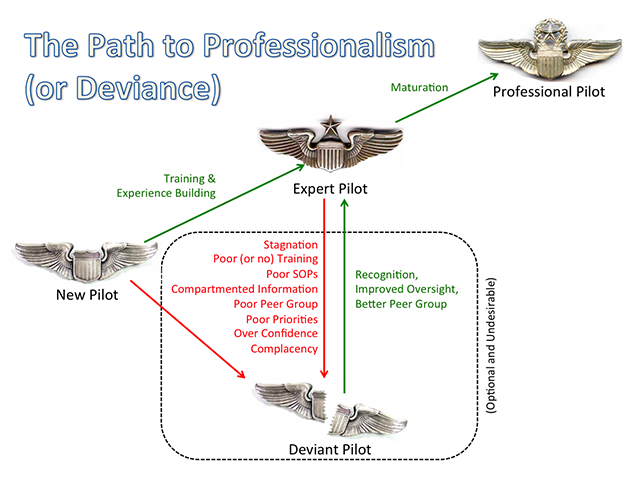

There are two routes to normalizing deviance with pilots.

- First, you can start your career free of standardization and miss the all-important introduction to SOPs. General aviation pilots who skip the military or major airlines are particularly at risk. If you've never flown for a large organization where failing to "march in step" will jeopardize your paycheck, you will have to work extra hard to avoid this trap.

- Second, you could make it to a high level of expertise but then succumb to the complacency fed by your successful experiences; you could be beaten by the ever-present need to accomplish objectives (save time, save money, achieve schedules); or you might rebel against unrealistic SOPs and burdensome regulations.

Either way, you are living on borrowed time.

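1 — The academic definition

2 — The aviator's definition

3 — Objective-based normalization of deviance

4 — Ego-driven normalization of deviance

5 — Experience-based normalization of deviance

6 — Avoiding and curing the normalization of deviance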

1

The academic definition

The term "normalization of deviance" was coined by sociology professor Diane Vaughan in her 1996 book, The Challenger Launch Decision: Risky Technology, Culture, and Deviance at NASA, where she examines the tragedy of the 1986 launch of Space Shuttle Challenger. It is a useful term for us in the business of flying airplanes, but her definition (which follows) can be a bit ponderous. I've included what I could extract from the book because it is worth considering:

- Deviance refers to behavior that violates the norms of some group. No behavior is inherently deviant; rather it becomes so in relation to particular norms.

- Deviance is socially defined: to a large extent, it depends on some questionable activity or quality being noticed by others, who react to it by publicly labeling it as deviant.

- [In the case of the Space Shuttle Challenger incident], actions that outsiders defined as deviant after the tragedy were not defined as deviant by insiders at the time the actions took place.

Source: Vaughan, pg. 58.

- Individuals assess risk as they assess everything else—through the filtering lens of individual world view.

- The diverse experiences, assumptions, and expectations associated with different positions can manifest themselves in distinctive world views that often result in quite disparate assessments of the same thing. When risk is no longer an immediately knowable attribute of the object and the possible harm associated with it depends on other, less knowable factors, we move into the realm of uncertainty and probabilities.

- As experience accumulates, the formal assessment of risk will vary with changes in the technology, changes in knowledge about its operation, or comparison with other similar technologies.

- Implied in the term "expert" is some technical skill, gained either by experience, by professional training, or by both, that differentiates the professional from the lay assessment of risk. Also implied is that professionalism will somehow result in a more "objective" assessment than that of the amateur.

- But professional training is not a control against the imposition of particularistic world views on the interpretation of information. To the contrary, the consequence of professional training and experience is itself a particularistic world view, comprising certain assumptions, expectations, and experiences that become integrated with the person's sense of the world. The result is that highly trained individuals, their scientific and bureaucratic procedures giving them false confidence in their own objectivity, can have their interpretation of information framed in subtle, powerful, and often unacknowledged ways.

Source: Vaughan, pg. 58.

- [In the case of the space shuttle], the work group normalized the deviant performance of the SRB [Solid Rocket Booster] joint[s between sections]. By "normalized," I mean the behavior the work group first identified as technical deviation was subsequently reinterpreted as within the norm for acceptable joint performance, then finally officially labeled an acceptable risk. They redefined evidence that deviated from an acceptable standard so that it became the standard.

- The culture of production included norms and beliefs originating in the aerospace industry, the engineering profession, and the NASA organization, then uniquely expressed in the culture of Marshall Space Flight Center. It legitimated work group decision making, which was acceptable and non-deviant in that context.

- The second factor reinforcing work group decision making was structural, and the structure of regulatory relations perpetuated the normalization of deviance.

Source: Vaughan, pg. 65.

2

The aviator's definition

You won't find this anywhere; I just made it up . . .

The normalization of deviance is the incremental change to standards we once thought inviolate, turning actions once thought to be unacceptable into the new norm. The path to normalizing deviance can be paved by the lack of proper training, an experience-based ego, or expertise-based over-confidence. The ease with which one slides into the normalization of deviance can be facilitated by "groupthink," the lack of oversight, and a poor peer group.

3

Objective-based normalization of deviance

The primary way a highly professional pilot can be trapped into the normalization of deviance is to be ensnared by the pressure of having to achieve hard-to-achieve objectives. These objectives are usually placed on highly experienced experts, who can talk themselves into believing any normalization of deviance is justified.

While the 1986 explosion after takeoff of the Space Shuttle Challenger was the defining moment for the concept of the normalization of deviance, there were doubtless many examples before and after. Incredibly, Challenger was not the only space shuttle to fall victim to the normalization of deviance.

Space Shuttle Challenger

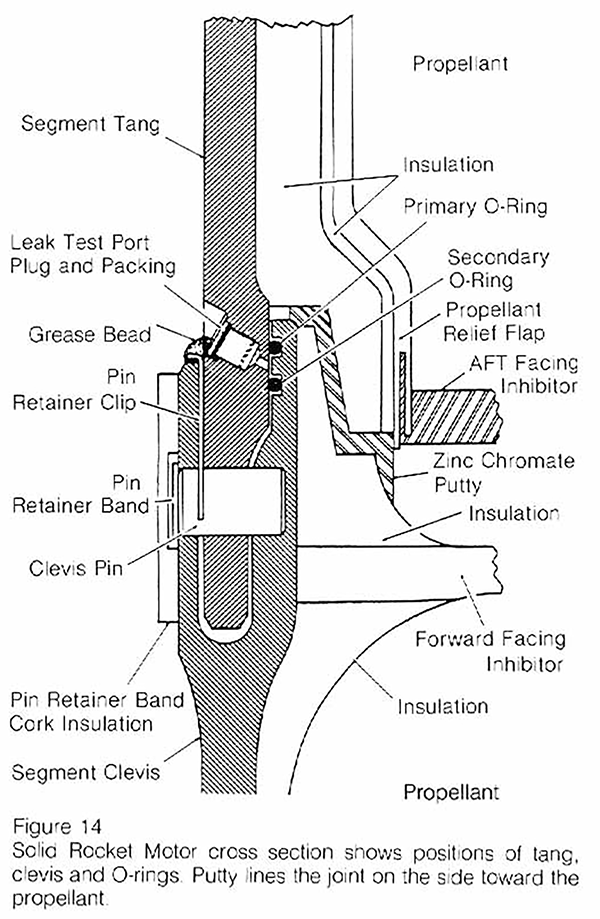

The Solid Rocket Boosters (SRBs) on the space shuttle were built by Morton Thiokol, which was quite literally the cheapest bidder. Each booster was 149' long and 12' in diameter, was manufactured in six sections, and delivered to NASA in sets of two which were joined at the factory. The three combined sections were joined in the field. Each joint kept hot propellant gases on the inside with the help of two rubberlike O-rings and an asbestos-filled putty. The 1/4" diameter O-rings surrounded the rocket's entire 12' diameter. The secondary O-ring was meant to be redundant, a safety measure. But early on in the program there was evidence of some "blow-by" beyond the primary O-ring. Engineers determined an "acceptable" amount of erosion in the O-ring and for a while these norms held up. Starting in 1984 the amount of damage to the primary O-ring was increasing. Engineers were alarmed but were later convinced the damage was slight enough and the time of exposure was short enough that the risk was acceptable. In 1985 some of the SRBs returned with unprecedented damage, the majority came back with damage, and in one case the secondary O-ring was also damaged. For one launch, there was complete burn through of a primary O-ring. In each case, the decision was to increase the amount of damage deemed acceptable and press on. When it was no longer possible to say the two O-rings were redundant, NASA decided to waive the requirement.

What also happened in 1985 was a series of launch decisions in colder and colder temperatures. While the overall shuttle program was designed with a temperature range of 31°F to 99°F as launch criteria, the SRBs were never tested at lower temperatures. In fact, Thiokol stated that O-ring temperatures must be at least 53°F at launch, or they would become brittle and would allow "extreme blow-by" of the gases. There was also evidence that the O-rings could become cold soaked and their temperatures would take time to recover from prolonged cold. But top-level NASA managers were unaware of the SRB design limitations and the 53°F threshold didn't hold firm. For one launch the engineers said "Condition is not desirable but is acceptable."

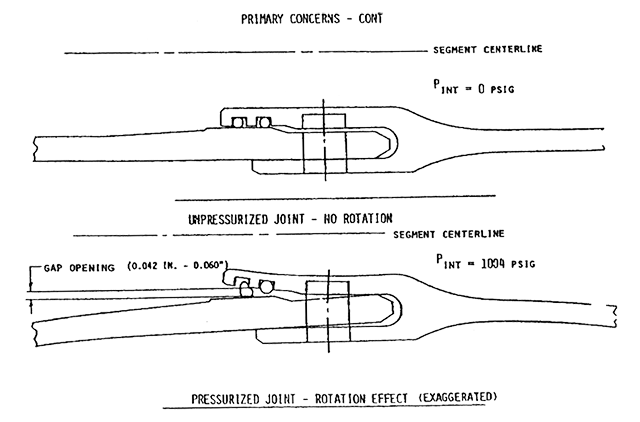

Pressurized joint-rotation effect (exaggerated), from NASA Teleconference, figure 11.5.

The temperature issue was made more complicated because there had been one launch where there was O-ring blow-by at 75°F, "indicating that temperature was not a discriminator." Of course this is ridiculous; temperature can be one of several discriminators. After the fact, investigators realized that "rotation" of the SRB could decrease O-ring contact with the joint. Rotation not in the sense of one section of the SRB rotating on its circumference against the next section, but of one section rotating longitudinally. For lack of a better term, it wobbles from side to side and creates a gap, as shown above in figure 11.5. What would cause such a wobble? Perhaps it was shifting winds along the craft's upward path. There was indeed such a windshear the morning of Challenger's launch. Nonetheless, the 75°F blow-by gave the proponents of launching an avenue to weaken the argument of those pressing for delay.

Temperatures on the morning of the launch were well below 53°F. Thiokol engineers recommended delaying the launch but NASA managers applied pressure on Thiokol management, who were unable to convince their engineers to budge. So they elected to make it a "management decision" without the engineers and agreed to the launch. It was 36°F at the moment of launch. The O-rings on one of the field joints failed almost immediately but the leak was plugged by charred matter from the propellant. About a minute after launch a continuous, well-defined plume from the joint cut into the struts holding the SRB to the main tank and the SRB swiveled free. The flame breached the main tank five seconds later, and the tank erupted into a ball of flame seconds after that. The shuttle cabin remained intact until impact with the ocean, killing all on board.

Much of the reporting after the event focused on the O-rings. After the accident report was published, the focus turned to NASA managers breaking rules under the pressure of an overly aggressive launch schedule. But, as Professor Vaughan points out, they weren't breaking any rules at all. In fact, they were following the rules that allowed launch criteria and other rules to be waived. The amount of acceptable primary O-ring damage went incrementally from none, to a little, to complete burn through. Over the years the practice of reducing safety measures with waivers had become normalized.

More about this: Report of the Presidential Commission on the Space Shuttle Challenger Accident.

Space Shuttle Columbia

Seventeen years later, on February 1, 2003, the Space Shuttle Columbia was lost as it reentered the earth's atmosphere. During launch, a piece of insulating foam from the external tank broke away and struck the left wing, damaging a heat tile. When Columbia reentered the earth's atmosphere, the damage allowed hot atmospheric gases to penetrate and destroy the internal wing structure, which eventually caused the shuttle to become unstable and break apart, killing all on board. As with the earlier accident, NASA failed to pay heed to engineering concerns about previous incidents of tile damage from separating foam.

4

Ego-driven normalization of deviance

Experts who have achieved lofty career objectives but do not have sufficient oversight can normalize deviance simply because they can do so without getting caught. The self-inflicted running aground of the cruise ship Costa Concordia is a case in point.

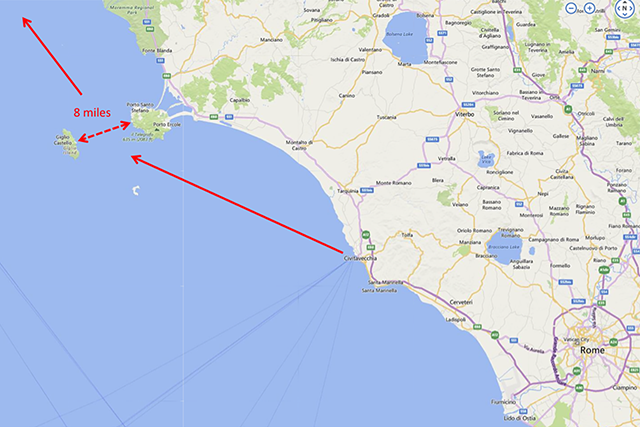

On January 13, 2012, the cruise ship Costa Concordia departed northbound from Porto Civitavecchia, near Rome, Italy. Its route took it through the eight-mile stretch between Giglio Island and the Italian mainland. Captain Francesco Schettino ordered the ship to sail closer to Giglio as a "salute" to the islanders. This was apparently the normal practice for many cruise ship captains when transiting this strait. He came too close and the left side of the ship hit the rocks, gashing the hull and sending water flooding into the ship's engine room. The ship lost power as a result and started listing to the left side, where the 174-foot gash was. Winds pushed the ship back to the island and the water in the hull caused the ship to reverse its list to the right. Meanwhile, it took an hour to begin the evacuation. Of the 3,206 passengers and 1,023 crew, all but 32 were rescued. Thirty bodies were found; two remain missing, presumed dead.

Captain Schettino was sentenced to prison for 16 years but Chief Prosecutor Beniamino Deidda wanted to elevate the blame: "For the moment, attention is generally concentrated on the responsibility of the captain, who showed himself to be tragically inadequate. But who chooses the captain?" He also noted that the crew didn't know what to do and were ill prepared for crisis management.

What goes unremarked in most of the reporting of this incident is that Captain Schettino was making this illegal and reckless "salute" in front of thousands of witnesses. If his behavior was so egregious, why had no one reported it before? It seems the entire company had succumbed to this form of deviance. The deviance had become the new norm.

More about this: "Costa Concordia: How the Disaster Unfolded," Sky News, Monday 04 July 2016, http://news.sky.com/story/costa-concordia-how-the-disaster-unfolded-10371938.

5

Experience-based normalization of deviance

Experts who have accumulated an enviable amount of experience and a strong sense of confidence are at risk of normalizing deviance unless they have sufficient oversight and a strong peer group. The crash of Gulfstream IV N121JM makes this case.

Gulfstream IV N121JM Wreckage, from NTSB Accident Docket, ERA14MA271, figure 10.

On May 31, 2014, the crew of Gulfstream IV N121JM started their engines without running the engine start checklist and neglected one of the steps which would have them disengage the flight control gust lock. They then skipped the after starting engines checklist which would have required the flight controls to be checked; had they done this, they would have realized the flight controls were locked. They also skipped the taxi and line up checklists, as well as the requirement to check the elevator's freedom of movement at 60 knots. They were unable to set takeoff thrust, realized this, and continued the takeoff. The rest, unfortunately, is history.

As the news gradually leaked out from the NTSB accident investigation, we in the aviation world were stunned. How could two pilots have been so inept? But their airplane was outfitted with a Quality Assurance Recorder and we learned that this type of behavior was the norm for them. For example, the recorder revealed that they had skipped the flight control check on 98% of their previous 175 takeoffs.

These two pilots did not fly in a vacuum. They occasionally flew with contract pilots who witnessed their habitual procedural non-compliance. By tolerating their deviance, these contract crews played the roles of enablers. That served to reinforce the behavior as normal.

More about this: Gulfstream IV N121JM

6

Avoiding and curing the normalization of deviance

At each stage of a new pilot's growth comes a time where he or she is tempted to think, "At last I know what I need to know." Some pilots may even arrive at the "At last I know everything there is to know" stage. With each new level of license and training the concept of You Don't Know What You Don't Know should become reinforced. The new pilot, if not careful, may end up in the "deviant pilot" class without having ever accomplished any level of expertise.

A new pilot who does not continue his or her education is at risk of stagnating into comfortable routines that do not take advantage of lessons learned by others. Poor training can be even worse than no training if you are being taught the wrong things. In either case, the pilot becomes too embarrassed to expose himself or herself to further training. This is an easy trap to fall into when flying as an amateur because there is little or no oversight, outside of a biennial review. When exposed to SOPs, these pilots are likely to react negatively. "I've been doing fine without them!"

An expert's path to deviance is different from a new pilot's; the expert should know better! The expert becomes so after significant professional training, significant and meaningful experience, and often from peer recognition. These factors all solidify in the expert's mind a technical basis for deviance; they have considered all the factors and have made an expert's determination that deviance is not only acceptable, but is necessary for the good of the mission. In fact, the expert can rationalize that the deviance is actually safer.

With each pilot there is likely to be a combination of issues leading toward the normalization of deviance. These issues form a disease that impacts the pilot's professional health; fortunately, for each issue there are known cures.

Issue: Stagnation

Flying can be an expensive hobby and at the point the money runs out amateurs find themselves with no future dreams to chase. For professionals there may also come a time when there are no further ratings to achieve and the prospect of a new career chapter seems unlikely. In either case, pilots risk a mental stagnation that weakens any motivation to keep in the books or keep up with the latest and greatest techniques and procedures.

- Stagnation Cures (self-administered) — The best way to cure pilot stagnation is to realize just how challenging and exciting the profession really is and to always have a goal in sight. Even those pilots without the next rating or airplane on the horizon can motivate themselves to teach others, to write, to speak, or to mentor the next generation of pilots.

- Stagnation Cures (administered by leadership) — If the schedule becomes routine or one year starts to look like the next, crews can become comfortable and complacent. It may be time to change up training (covered next) or rotate duties amongst pilots. If, as the senior pilot, you have added responsibilities on behalf of upper management, it may be a good time to delegate other duties to challenge those who become bored.

(This is one of the driving forces behind Code7700.com.)

Issue: Poor Training

Your training is only as good as the instructor and if you are taught to cut corners and to ignore all that has been learned over the years, you can be trained to deviate. This most often occurs when someone you respect or someone in a position of authority assumes the role of instructor but has already given in to deviant behavior. It can also occur when a professional training vendor has misguided ideas of what should and should not be taught, or does not exercise proper oversight of its instructors. Most professional pilots will agree that recurrent training is an absolute must when dealing with technologically complex systems in a dynamic environment; but how often?

- Poor (or no) Training Cures (self-administered) — The right answer to the "How often?" question depends on your "total" experience level, your experience in type, and your experience with the operation in question. The less experience you have in any of these areas, the more frequent your recurrent training should be. If you aren't getting enough training you should let your organization's leadership know. If money or schedule are tight, the answer could be an emphatic "no." But not all training has to be formalized and provided by a vendor. There are many online courses. You can also organize local training taught by local pilots.

- Poor (or no) Training Cures (administered by leadership) — Training administrators should realize that some training courses are merely square fillers that satisfy regulatory requirements but do not teach meaningful information. Others can become repetitive because most vendors seldom change their courses from year to year. In either case, these courses will become boring and may actually become counterproductive. Administrators should seek honest feedback about each course and attempt to find alternate vendors, even for the good courses. A robust in-house training program can supplement these efforts and also serve to combat stagnation and complacency.

Issue: Poor SOPs

We often find ourselves having to adjust, reorder, or even skip some standard operating procedures because they don't exactly fit the situation at hand, they would take more time than a widely accepted shortcut, or we think we have a better method. There are several problems, of course. Operating ad hoc, in the heat of the moment, we risk not carefully considering all possible factors. If we skip or reorder steps, we risk forgetting something important or failing to consider any sequential priorities. If we adjust an SOP on our own, crew resource management becomes more difficult as others have to guess about our procedures and techniques. Once we've violated the first SOP, it becomes easier to violate the second, and the third. Before too long the culture of having SOPs will erode and when that happens, all SOPs become optional. In a small flight department, there is a low likelihood of "being caught" or challenged.

- Poor SOPs Cure (self-administered) — Any pilot who is tempted to deviate from an SOP should first think about measures to formally change the SOP. There is a definite art to this. You need to carefully analyze the existing SOP, try to understand why the SOP is constructed as it is, come up with an alternative solution, gather support from peers, and advocate the change to those who have the power to change things.

- Poor SOPs Cure (administered by leadership) — Flight department leaders should work with crews to ensure that each SOP is pertinent, easily understood, easily followed, and consistent with other SOPs in the flight department and aircraft fleet. If adjustments are needed, select a well-respected team member to spearhead the effort, institute a test phase, and obtain manufacturer comments if possible.

Issue: Compartmented Information

Pilots tend to compartmentalize information for a variety of reasons. On a personal level, keeping a particular airplane's procedures separate from others, for example, can help keep cockpit procedures straight. We might want to insulate upper management from the nuts and bolts of what we do, reasoning that it is either too complicated to explain or uninteresting to non-practitioners. Some larger flight departments segregate fixed wing from rotary wing, Part 91 from Part 135, domestic from international, or draw any number of other artificial distinctions. Some pilots may even wish to keep things quiet for fear that their ignorance on some obscure topic could be revealed. Regardless of motivation, these efforts at compartmentalization give pilots a de facto secrecy. Pilots are much like engineers in this respect. The Space Shuttle Challenger incident provides a good case study where this kind of "structural secrecy" can lead to a normalization of deviance.

By structural secrecy, I refer to the way that patterns of information, organizational structure, processes, and transactions, and the structure of regulatory relations systematically undermine the attempt to know and interpret situations in all organizations.

Source: Vaughan, pg. 238

The 31°F-99°F ambient temperature requirement for launching the entire shuttle system was created early in the program by other engineers. In a palpable demonstration of structural secrecy, many teleconference participants had jobs that did not require them to know Cape launch requirements and so were unaware that this temperature specification existed. [ . . . ] [Launch decision makers] did not know that Thiokol had not tested the boosters to the 31°F lower limit.

Source: Vaughan, pg. 391

In the case of Space Shuttle Challenger, the entire organization was steeped in the idea that "anomalies" were dealt with and only the worst problems were channeled to top decision makers. The SRB anomalies were for engineers to deal with and upper level decision makers were never informed. Lower management levels had accepted an increasing level of deviance that upper levels may not have allowed.

A flight department can suffer the same ill effects of this de facto secrecy. Pilots could make decisions based on incomplete information or a misunderstanding of organizational goals.

- Compartmented Information Cure (self-administered) — We should learn to explain the complexities and intricacies of what we do in terms senior management can digest. If done well, they will be interested and become engaged. This pays dividends when it comes time to request training and deal with personnel issues. We should also include the entire flight department when dealing with pilot issues at every level. A domestic-only pilot, for example, is bound to be interested in international operations and will be a willing participant. This adds the extra benefit of forcing the international pilot to think through the issues in order to explain them clearly.

- Compartmented Information Cure (administered by leadership) — The best way to encourage a free flow of information laterally between crews and upward from crews to management is to provide the same level of communication downward from management. Monthly meetings seem to work best, but meetings should be held at least quarterly. Think of these meetings as planned entertainment: they need to be interesting and informative if you hope to encourage the open exchange of ideas. Even if you can't include everyone because of schedule constraints, a one-page summary of the meeting can keep everyone involved.

Issue: Poor Peer Group

Of course no professional pilot sets out to bend the rules on the margins or flagrantly disobey all SOPs; but many end up doing just that. Good pilots can be corrupted by poor peer groups. If everyone else has already normalized deviant behavior, it will seem an impossible task to hold true to SOPs without upsetting the status quo.

- Poor Peer Group Cure (self-administered) — There are several options; you should consider each or a combination.

- Lead by example — Use SOPs and best practices whenever you can and when asked, provide a compelling case for the SOP. In one of my Challenger flight departments pilots did not do a navigation accuracy check prior to oceanic coast out. I always did one and when asked, demonstrated how such a precaution could save you prior to your first waypoint if there was a navigation system problem or waypoint insertion error. That pilot adopted the procedure and before long it became a flight department SOP.

- The Socratic Method — Greek philosopher Socrates taught through the use of probing questions; you can apply this in most flying situations. If a senior member of the flight department insists on a non-standard procedure, ask for the reasons behind the procedure "to better understand how to accomplish the procedure." Having to verbalize the rationale may force a reexamination of the entire thought process. When I first showed up in a GIV flight department the senior pilots did not use any type of verification method prior to executing a change to FMS programming. I asked how this method would prevent an entry error that could misdirect the aircraft. After some thought, they agreed they had no such verification and were open to a new technique.

- Diplomatic demonstration — If it is possible to demonstrate the efficacy of an SOP against a deviation, attempt to convince your peers to participate. Years ago, as the newest pilot in a GIV flight department, I was alarmed that the pilots flowed the after engine start checklist (Do-Verify) and often missed critical steps. I convinced them to pit their method against the required method (Challenge-Do-Verify) and a stopwatch. We discovered that not only was CDV more accurate than DV, it was faster.

- Group Meeting — It could very well be that a majority of pilots in your flight department have the same issues with some nonstandard procedures and a group meeting to discuss the issues can solve the problem. You should obtain leadership buy-in first. Leadership may be surprised about the issue; you might be surprised how open to change they can be.

- Outside Oversight — It is easy to fall into nonstandard behavior without an occasional look from someone outside the flight department. If the entire organization normalizes deviance at about the same rate, no one will notice because they are all involved. It may be beneficial to request an outside look at the inside of your flight department.

- Peer Pressure — Once you have at least two members of the flight department willing to crusade for stricter adherence to SOPs, you have what you need to apply positive peer pressure to the rest of the organization. You may be surprised that a few voices speaking as one can have powerful results. Most pilots will be alarmed to find they have become part of the problem and will welcome a wake-up call.

- Poor Peer Group Cure (administered by leadership) — As a flight department leader, all of the tools available to individuals are also available to you. But you have the added benefit of knowing your actions will carry more weight. It may be very difficult to measure the health of a flight department's peer group, especially that of a small flight department. You might be able to discern problems by asking for an outside audit or a Line Operations Observation. If you have any personnel turnover you should encourage frank feedback from exit interviews.

More about this: Navigation Accuracy Check.

More about this: Checklist Philosophy.

More about this: Line Operation Observations.

Issue: Poor Priorities (Mission Objectives Over Safety)

One of the profound lessons of the Space Shuttle Challenger tragedy is that decision makers believed they were making the right, reasoned decision each step of the way, though in hindsight they would have to agree many of these decisions were foolish. They didn't set out to doom the shuttle to its fate, but that's exactly what they did. NASA managers were under a great deal of pressure to increase the launch rate in an effort to prove the shuttle had progressed from "test" to "operational" phases. These managers were experts in their fields and were confident each design waiver was the right decision. As their experience with waiving criteria closer and closer to the edge of safety accumulated, their confidence increased. Professor Vaughan calls this the "Culture of Production," as opposed to the engineering culture we all assumed ruled the roost at NASA.

- We examine engineering as a craft and engineering as a bureaucratic profession in order to later demonstrate how these two aspects of engineering culture contributed to the persistence of the SRB workgroup's cultural construction of risk. [pg. 200]

- The public is deceived by a myth that the production of scientific and technical knowledge is precise, objective, and rule-following. Engineers, in this view, are "the practitioners of science and purveyors of rationality." [pg. 200]

- When technical systems fail, however, outside investigators consistently find an engineering world characterized by ambiguity, disagreement, deviation from design specifications and operating standards, and ad hoc rule making. [pg. 200]

- Rather than being retained as consultants, engineers are typically hired as staff in technical production systems, situated in a workplace organized by the principles of capitalism and bureaucratic hierarchy. [ . . . ] Their creative work is bracketed by program decisions made outside (and above) the lab. [pg. 204]

- This pure technical culture was the original source of the NASA self-image that after the Challenger tragedy was referred to as "can do." "Can do" was shorthand for "Give us a challenge and we can accomplish it." [pg. 209]

- Top space agency administrators shifted their attention from technical barriers to space exploration to external barriers to organizational survival. [pg. 212]

- [ . . . ] the work group's behavior demonstrated a belief in the legitimacy of bureaucratic authority relations and conformity to rules as well as the taken-for-granted nature of cost, schedule, and safety satisficing.

Pilots in the "expert" class are in remarkably similar circumstances. They are quite often under extreme pressure to minimize costs while expanding mission capabilities. While spending less and less on maintenance, training, and operating costs, they are expected to fly longer distances and longer duty days. Skipping maintenance checks, training events, and checklist steps is at first approached carefully, with considerable thought. Formal waivers may be instituted in an effort to do it "just right." Before too long the line between what is considered a deviation and what is just "normal operating practice" starts to blur. These decisions are rarely black and white and plainly labeled as "we are about to deviate from a procedure we once considered sacred." Rather, they are cleverly disguised. In fact, we can tell ourselves the deviation is for the good of the mission; we can tell ourselves we are actually being safer.

I was once in a flight department that had a strict 5,000' minimum runway length and for good reason. The aircraft's normal landing distance was well within this if properly flown and if the runway was dry. When our new CEO made it clear he wanted to start flying into Hilton Head Island Airport, SC (KHXD) with a runway only 4,300' in length, the rank and file pilot answer was to suggest using Savannah International Airport, GA (KSAV), only 26 nautical miles away. Our chief pilot decided we would allow the shorter runway only if flown by a highly experienced pilot with more than 1,000 hours in type, on a VMC day, provided the runway was dry. As we started running out of such qualified pilots the experience requirement was dropped. Before too long any pilot could be assigned the trip and the dry runway restriction was removed. After my first trip there I made it clear I would never go back and soon every pilot in the flight department except the chief pilot voted likewise. The chief pilot was fired (for other reasons) and his replacement took Hilton Head off our list of acceptable airports. The CEO said he was okay with this, but within a year sold the company.

More about this: The Art of Saying the Word "No".

A common problem in all types of professional aviation can be called "target fixation" or "losing the forest for the trees." We tend to become so focused on getting the job done we can lose sight of the need to do so safely.

- Poor Priorities Cure (self-administered) — One way to keep the organization's overall goal in perspective (i.e., move people from Point A to Point B) is to always have a backup plan in mind: charter transportation in the event of a maintenance problem, for example, or ground transportation in the event of weather below minimums. If you routinely brief alternate plans, having to enact one may not seem too extreme a measure.

- Poor Priorities Cure (administered by leadership) — A poorly kept secret in many aviation circles is that the "safety first" motto is often quoted, rarely enforced. Managerial actions, such as frequent duty day waivers and calls to "hurry up" can undo any spoken assurances. The cure, of course, is to live the "safety first" motto every day.

Issue: Over Confidence

A highly trained, highly experienced, and highly praised pilot is more likely to deviate from SOPs than a novice because of a high level of confidence. Here too there are parallels with the space program.

Implied in the term "expert" is some technical skill, gained either by experience, by professional training, or by both, that differentiates the professional from the lay assessment of risk. Also implied is that professionalism will somehow result in a more "objective" assessment than that of the amateur. But professional training is not a control against the imposition of particularistic world views on the interpretation of information. To the contrary, the consequence of professional training and experience is itself a particularistic world view comprising certain assumptions, expectations, and experiences that become integrated with the person's sense of the world. The result is that highly trained individuals, their scientific and bureaucratic procedures giving them false confidence in their own objectivity, can have their interpretation of information framed in subtle, powerful, and often unacknowledged ways.

Source: Vaughn, pg. 58.

- Over Confidence Cure (self-administered) — With experience comes confidence and, in some cases, over confidence. It is important to realize that even the best pilots make mistakes and you can learn that through empathetic study of accident case studies. Study these with the realization that the pilots in question did not set out to crash their aircraft, but they were met by circumstances that you could easily one day have to confront. You should also realize that your actions set the tone for others; and these less experienced pilots will be tempted to mimic your actions without the same base of experience to rely upon. There are many examples of pilots granted the title of "test pilot" while flying new production aircraft mishandling problems that a rookie crew could have survived with better results. The experienced pilot believes his or her skill can handle compound problems and other adverse conditions, while the newer pilot is likely to be more cautious.

- Over Confidence Cure (administered by leadership) — Pilots tend to be confident by design; it may be difficult to detect unwarranted confidence by observing the pilot's actions. A better indicator may be other metrics, such as the usual flying time between common city pairs or operating costs. If these metrics improve for some unknown reason, it could be some pilots are cutting corners.

Example: Gulfstream G550 N535GA.

Issue: Complacency

For pilots who become comfortable with their day-to-day cockpit existence, there is a temptation to stop reading checklists and rely on memory, to stop taking precautions that never seemed necessary before, to start taking the "easy way" over the "right way." Highly experienced pilots and pilots in managerial positions are at greater risk because they are less likely to receive needed oversight.

More about this: Complacency.

- Complacency Cure (self-administered) — The best preventative measure for complacency is to keep motivated to learn and further enhance your value as a pilot. This involves regular study of your current aircraft and operation, augmented by research into future aircraft and operations. Keep engaged with peers and other sources of aviation information. If you are the known source in your peer group, assume the role of mentor; that role will require you to really dive into the books to ensure your lessons are valid.

- Complacency Cure (administered by leadership) — Deviancy is encouraged when there is a low chance of being caught, so regular evaluations are needed. Unfortunately, most of the checks offered by major training vendors do little to enforce SOPs, and these are in simulators where the emphasis tends to be on emergency procedures, not normal day-to-day operations. Having an outside auditor examine the flight department can ensure the correct SOPs are in place. Inviting an experienced outside pilot to observe crews on operational trips can help identify weak points and encourage tighter compliance with SOPs.

More about this: Line Operation Observations.

7

Concluding thoughts

The normalization of deviance is like a cancer, both on the individual basis and for the group as a whole. The individual is unlikely to realize he or she has been afflicted until it is too late. At that point the individual believes the deviant behavior to be normal. The cancer metastasizes quickly and unless the group has a strong leader and a strong culture grounded in standard operating procedures, the entire group is at risk. I've seen this first hand in the military and as a civilian. In both cases the experience level was very high and such behavior was unexpected. But in both cases one person can make a difference.

More about my military experience with the normalization of deviance here: Flight Lessons 3: Experience.

References

(Source material)

"Costa Concordia: How the Disaster Unfolded," Sky News, Monday 04 July 2016, http://news.sky.com/story/costa-concordia-how-the-disaster-unfolded-10371938

NASA Teleconference, January 27, 1986, from Vaughn, pg. 295.

NTSB Accident Docket, ERA14MA271

NTSB Aircraft Accident Report, AAR-15/03, Runway Overrun During Rejected Takeoff Gulfstream Aerospace Corporation GIV, N121JM, Bedford, Massachusetts, May 31, 2014

Report to the Presidential Commission on the Space Shuttle Challenger Accident, June 6th, 1986, Washington, D.C.

Vaughn, Diane, The Challenger Launch Decision: Risky Technology, Culture, and Deviance at NASA, The University of Chicago Press, Chicago and London, 1996.