I get a lot of email, as you can imagine. The last few weeks have seen a spike, and just about all of that spike is on one subject: 5G. As many of you know, I also write for Aviation Week and Business & Commercial Aviation magazines. One of my colleagues there wrote that the 5G and radio altimeter controversy is nothing to worry about, because radio altimeters are just advisory! This individual has had an illustrious career and he is quite smart. But on this subject, his experience and knowledge are dated. When asked, I say that it is something to worry about. But understanding the problem requires a bit of history. Let's get started.

— James Albright

Updated:

2022-01-25

First, a quick note. The terms "radio altimeter" and "radar altimeter" refer to the same thing. A radar uses radio waves.

1

1G

Prior to the adoption of cellular networks, early mobile communications systems used a single high power base station to cover a wide area. The problem with this approach was that due to the finite amount of available spectrum, only a small number of users could be supported and when they dropped out of range they lost their connection.

The beauty of the ‘cellular’ approach is that vastly more users can be accommodated because spectrum is re-used across a given area. This means several phones can use the same frequency channel as long as they are connected to different “cells” (i.e. base stations) that are sufficiently far apart. It also means that when a user drops out of range of one base station their call can be handed over to another allowing them to continue the conversation.

Source: GSMA, p.27

Who invented the cell phone? Some say it was Motorola in the U.S. in 1973. What about the first network?

The first generation of mobile networks – or 1G as they were retroactively dubbed when the next generation was introduced – was launched by Nippon Telegraph and Telephone (NTT) in Tokyo in 1979. By 1984, NTT had rolled out 1G to cover the whole of Japan.

In 1983, the US approved the first 1G operations and Motorola’s DynaTAC became one of the first ‘mobile’ phones to see widespread use stateside. Other countries such as Canada and the UK rolled out their own 1G networks a few years later.

However, 1G technology suffered from a number of drawbacks. Coverage was poor and sound quality was low. There was no roaming support between various operators and, as different systems operated on different frequency ranges, there was no compatibility between systems. Worst of all, calls weren’t encrypted, so anyone with a radio scanner could drop in on a call.

Source: Haverans

These phones used analog signals, meaning the sound was carried by varying either the amplitude or the frequency of a radio signal. Every call between a cellphone and a cell tower occupied a slice of the spectrum that no one else nearby could use at the same time. The frequencies assigned to cellphones quickly became congested.

A variety of different types of 1G mobile network technologies rapidly grew up around the world including:

- Advanced Mobile Phone System (AMPS) – which mostly used the 800 MHz band

- Nordic Mobile Telephone System (NMTS) – which mostly used 450 MHz & 900 MHz

- Total Access Communication System (TACS) – which mostly used 900 MHz

However these different, and incompatible, analogue systems meant using a phone abroad was impossible and they soon became oversubscribed and prone to cloning and eavesdropping.

Source: GSMA, p. 29

Another issue with analog signals is that they tended to degrade with distance and were subject to losses when amplified.

At its best, 1G was capable of transferring 2.4 thousand bits per second, or 2.4 kbps.

2

2G

The second generation of mobile networks, or 2G, was launched under the GSM standard in Finland in 1991. For the first time, calls could be encrypted and digital voice calls were significantly clearer with less static and background crackling.

Source: Haverans

The Global System for Mobile Communications (GSM) was developed specifically for 2G digital networks. At first, GSM simply turned analog signals, such as your voice, into digital data. This solved many problems because the signal didn't degrade with distance and was less subject to noise and other distortion. It also made it possible to send other kinds of data, such as text.

But 2G was about much more than telecommunications; it helped lay the groundwork for nothing short of a cultural revolution. For the first time, people could send text messages (SMS), picture messages, and multimedia messages (MMS) on their phones. The analog past of 1G gave way to the digital future presented by 2G. This led to mass-adoption by consumers and businesses alike on a scale never before seen.

Although 2G’s transfer speeds were initially only around 9.6 kbit/s, operators rushed to invest in new infrastructure such as mobile cell towers. By the end of the era, speeds of 40 kbit/s were achievable and EDGE connections offered speeds of up to 500 kbit/s. Despite relatively sluggish speeds, 2G revolutionized the business landscape and changed the world forever.

Source: Haverans

Digital data consumes less bandwidth, but on its own it can still monopolize a portion of the allotted frequencies. If you made a call, for example, a slice of the allotted frequency had to be carved out for you and became unavailable to other users until you were done or otherwise disconnected. The solution was to use "packets" of data. Your voice call, text message, or any other type of data is broken down into smaller packets of information. Each packet includes a "decoder" known as a header. The header tells the system where the packet needs to go and, once it gets there, tells the receiver how to combine all the packets back into their original form, such as your phone call. The early packet systems were the General Packet Radio Service (GPRS) and Enhanced Data Rates for GSM Evolution (EDGE).
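The packet idea can be sketched in a few lines of Python. This is only an illustration: the `Packet` fields, the 8-byte chunk size, and the destination name are made up for the example and are not how GPRS or EDGE actually lay out their headers.

```python
import random
from dataclasses import dataclass


@dataclass
class Packet:
    # The "header": hypothetical fields chosen for illustration only.
    dest: str       # where the packet needs to go
    seq: int        # position of this chunk within the original message
    total: int      # how many packets make up the whole message
    payload: bytes  # the slice of data this packet carries


def packetize(message: bytes, dest: str, size: int = 8) -> list:
    """Break a message into fixed-size chunks, each with its own header."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(dest, seq, len(chunks), chunk)
            for seq, chunk in enumerate(chunks)]


def reassemble(packets: list) -> bytes:
    """Use the headers to put the chunks back in their original order."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or len(ordered) != ordered[0].total:
        raise ValueError("one or more packets are missing")
    return b"".join(p.payload for p in ordered)


message = b"Your voice call, text message, or any other data"
packets = packetize(message, dest="cell-tower-17")
random.shuffle(packets)          # packets may arrive in any order
restored = reassemble(packets)
print(restored == message)
```

Because each packet carries its own header, no slice of spectrum has to be reserved for one user for the whole conversation; packets from many users can share the same channel.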

As GSM was designed by the mobile community to be fully interoperable, the same devices and network equipment could be sold globally, helping to bring down prices and allowing consumers to roam on foreign networks for the first time. These networks largely operated in the 900 MHz, 1,800 MHz, 850 MHz and 1,900 MHz bands.

Source: GSMA, p. 31

These frequencies were for the most part previously unused and there wasn't any real controversy about devoting them to cell phones. The introduction of digital packets greatly increased capacity and phone call quality. The addition of text and other data was a bonus.

3

3G

3G was launched by NTT DoCoMo in 2001 and aimed to standardize the network protocol used by vendors. This meant that users could access data from any location in the world as the ‘data packets’ that drive web connectivity were standardized. This made international roaming services a real possibility for the first time.

3G’s increased data transfer capabilities (4 times faster than 2G) also led to the rise of new services such as video conferencing, video streaming and voice over IP (such as Skype). In 2002, the Blackberry was launched, and many of its powerful features were made possible by 3G connectivity.

The twilight era of 3G saw the launch of the iPhone in 2007, meaning that its network capability was about to be stretched like never before.

Source: Haverans

The vast majority of networks globally used Wideband CDMA (WCDMA) technology, which was the natural evolution from 2G GSM systems. However, a number of operators used the alternative CDMA 2000 system and a China-specific TD-SCDMA version was also developed. Most 3G networks operate in the 800 MHz, 850 MHz, 900 MHz, 1,700 MHz, 1,900 MHz and 2,100 MHz bands.

In 2005, the first network was upgraded to support High Speed Packet Access (HSPA) which allowed download speeds of up to 14.4 Mbps and became known as 3.5G. Since then further upgrades including HSPA+ accelerated speeds up to 42 Mbps and beyond.

Source: GSMA, p. 33

4

4G

4G was first deployed in Stockholm, Sweden and Oslo, Norway in 2009 as the Long Term Evolution (LTE) 4G standard. It was subsequently introduced throughout the world and made high-quality video streaming a reality for millions of consumers. 4G offers fast mobile web access (up to 1 gigabit per second for stationary users) which facilitates gaming services, HD videos and HQ video conferencing.

The catch was that while transitioning from 2G to 3G was as simple as switching SIM cards, mobile devices needed to be specifically designed to support 4G. This helped device manufacturers scale their profits dramatically by introducing new 4G-ready handsets and was one factor behind Apple’s rise to become the world’s first trillion dollar company.

While 4G is the current standard around the globe, some regions are plagued by network patchiness and have low 4G LTE penetration. According to Ogury, a mobile data platform, UK residents can only access 4G networks 53 percent of the time, for example.

Source: Haverans

4G introduced Orthogonal Frequency Division Multiplexing (OFDM), which made transmissions more resilient against attenuation and interference. OFDM also allowed multiple signals to be combined and then broken up again, allowing more data on the same frequencies. 4G also took up some of the frequencies once devoted to older analog television stations. But we were still running out of bandwidth, and the search was on for more usable frequencies.

Each new cellular generation uses wider channel bandwidths as well as improved radio technology to drive faster connection speeds, requiring the use of increasing amounts of spectrum.

Source: GSMA, p. 34

5

Running out of spectrum

Radio spectrum, GSMA, p. 12

Measuring the electromagnetic spectrum

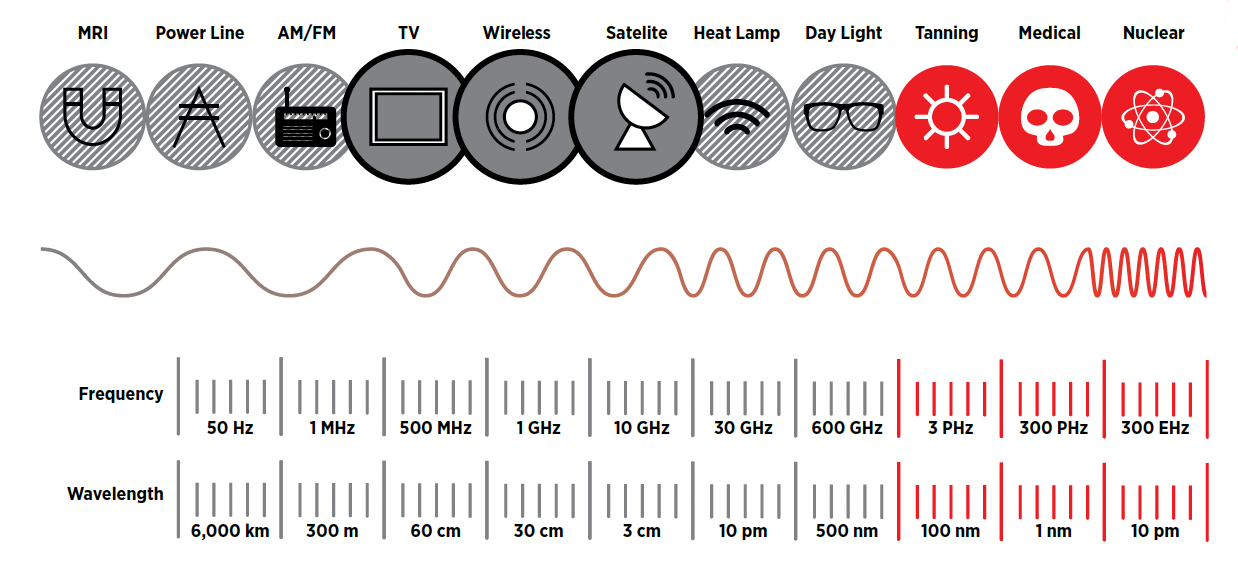

Radio waves constitute just one portion of the entire electromagnetic spectrum, which also includes a variety of other waves including X-ray waves, infrared waves and light waves. The electromagnetic spectrum is divided according to the frequency of these waves, which are measured in Hertz (i.e. waves per second). Radio waves are typically referred to in terms of:

- kilohertz (or kHz), a thousand waves per second

- megahertz (or MHz), a million waves per second

- gigahertz (or GHz), a billion waves per second

The radio spectrum ranges from low frequency waves at around 10 kHz up to high frequency waves at 100 GHz. In terms of wavelength, the low frequencies are about 30 km long and the high frequencies are about 3 mm.

Source: GSMA, p. 12
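The wavelength figures quoted above follow from dividing the speed of light by the frequency. A minimal sketch that reproduces them (the function name is my own, and the 4.2 GHz entry is added because that is the bottom of the radio altimeter band discussed later):

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the meter


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in meters of a radio wave at the given frequency."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


for label, frequency_hz in [("10 kHz", 10e3),
                            ("4.2 GHz", 4.2e9),
                            ("100 GHz", 100e9)]:
    print(f"{label}: {wavelength_m(frequency_hz):.4g} m")
```

The 10 kHz case works out to roughly 30 km and the 100 GHz case to roughly 3 mm, which matches the quoted range.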

Frequency bands

Radio spectrum is divided into frequency bands, which are then allocated to certain services. For example, in Europe, the Middle East and Africa, the FM radio band is used for broadcast radio services and operates from 87.5 MHz-108 MHz. The band is subdivided into channels that are used for a particular transmission, so the individual channels in the FM band represent the separate broadcast radio stations.

The wider the frequency bands and channels, the more information that can be passed through them. This move towards wider — or broader — frequency bands that can carry larger amounts of information is one of the most important trends in telecommunications and directly relates to what we refer to as a ‘broadband’ connection.

In the same way that wider roads mean you can add more lanes to support more vehicle traffic, wider bands mean you can add more channels to support more data traffic.

Source: GSMA, p. 14

Running out

In 1990 there were around 12 million mobile subscriptions worldwide and no data services. In 2015, the number of mobile subscriptions surpassed 7.6 billion (GSMA Mobile Economy 2016) with the amount of data on networks reaching 1,577 exabytes per month by the end of 2015, the equivalent of 1 trillion mp3 files or 425 million hours of streaming HD video.

Source: GSMA, p. 9

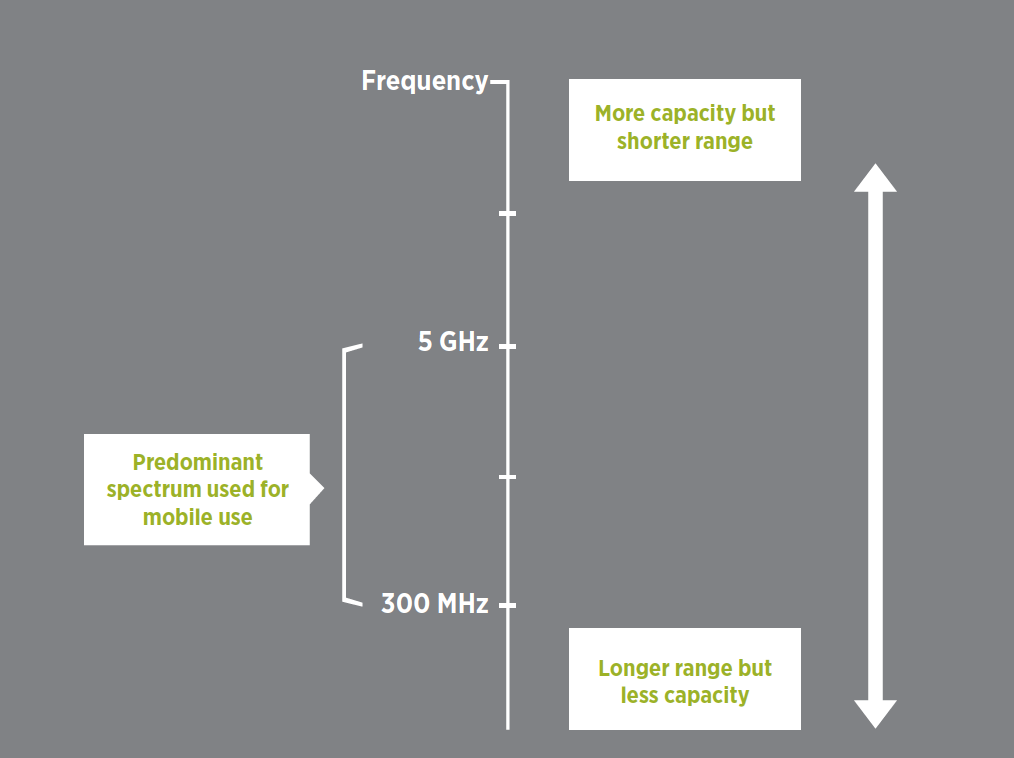

Even with the increased number of frequencies available to 4G systems and the improved data handling, it became apparent that the global appetite for bandwidth required more frequencies. It appeared we had run out of the lower frequencies, so what about higher frequencies? It is easier to pack more information into the higher frequency bands, but the higher end has its downsides.

6

The Good and Bad of Higher Frequencies

Low versus high frequency bands, GSMA, p. 12

Lower versus higher frequency bands

Lower frequency bands provide wider coverage because they can penetrate objects effectively and thus travel further, including inside buildings. However, they tend to have relatively poor capacity capabilities because this spectrum is in limited supply so only narrow bands tend to be available.

Contrastingly, higher frequency bands don’t provide as good coverage as the signals are weakened or even stopped by obstacles such as buildings. However, they tend to have greater capacity because there is a larger supply of high frequency spectrum making it easier to create broad frequency bands, allowing more information to be carried.

Source: GSMA, p. 15

We are reaching the limits of cramming more and more data into these frequencies so we are looking for more frequencies to use. The most recent push has been to use High Frequency Millimeter Waves, which have previously been shunned since they don’t travel as far and are more likely to be interrupted by obstructions such as mountains or even buildings. (They almost always require a direct line of sight.) So you need a lot of cell towers. This is 5G.

You can steer 5G beams to target each user. Massive Multiple Input Multiple Output (Massive MIMO) lets a 5G tower aim its signal directly at a user, so the same tower can reuse the same frequency for different users in different directions. But the limited range and the fact that the signal can be blocked mean you need a lot of cell towers. Studies estimate that to bring 100 Mbps to 72% of the U.S. population and 1.0 Gbps to 55% of the U.S. population, we will need 13 million utility pole mounted base stations. This will cost $400B.

The higher frequencies used by 5G can carry more data, meaning the speed can reach 1,800 Mbps.
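To put the speeds quoted for each generation in perspective, here is a rough sketch of how long one file would take to transfer at each peak rate. The rates are the ones cited in this article; the 5 MB file size is an arbitrary choice for illustration, and real-world throughput is well below these peaks.

```python
# Peak rates in bits per second, as quoted earlier in this article.
PEAK_RATES_BPS = {
    "1G": 2.4e3,
    "2G (initial)": 9.6e3,
    "2G (EDGE)": 500e3,
    "3.5G (HSPA)": 14.4e6,
    "4G (stationary)": 1e9,
    "5G": 1.8e9,
}

FILE_SIZE_BYTES = 5_000_000  # hypothetical 5 MB file, for illustration only


def transfer_seconds(size_bytes: float, rate_bps: float) -> float:
    """Ideal transfer time: total bits divided by bits per second."""
    return size_bytes * 8 / rate_bps


for generation, rate_bps in PEAK_RATES_BPS.items():
    seconds = transfer_seconds(FILE_SIZE_BYTES, rate_bps)
    print(f"{generation:>16}: {seconds:,.3f} s")
```

At the 1G rate the file takes hours; at the 5G rate it takes a small fraction of a second.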

7

Trouble

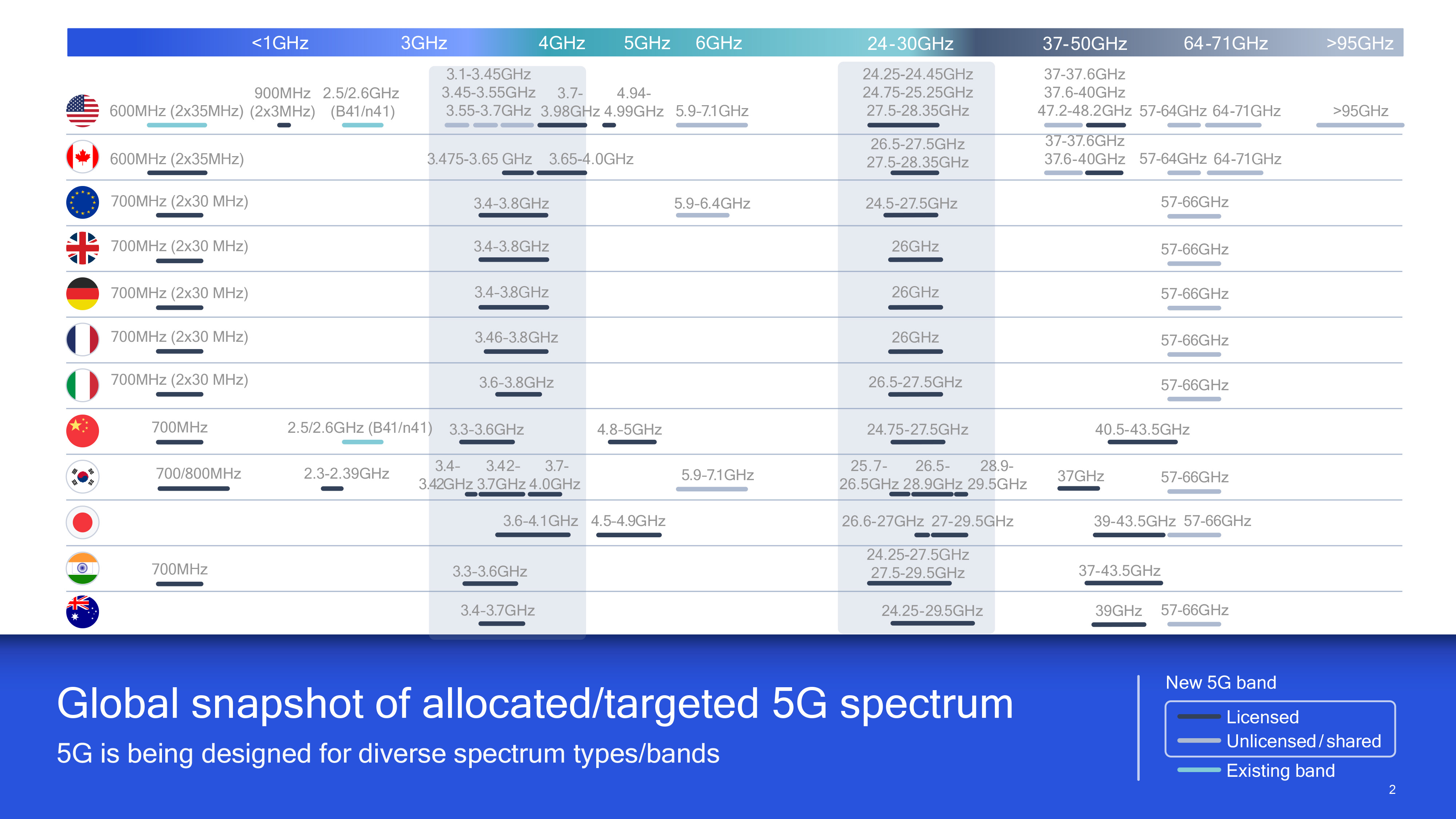

You may have heard there is a problem with 5G for aviators in the United States whereas the rest of the world is fine. This isn't exactly true. If you look at the frequencies allocated for 5G worldwide, you might notice something peculiar:

Global snapshot of allocated/targeted 5G spectrum, Qualcomm

Radio altimeters operate in the 4.2 to 4.4 GHz band. Notice that in most parts of the world, the lower frequency 5G bands go no higher than 3.8 GHz, providing a 0.4 GHz (400 megahertz) buffer below the bottom of that band. But in the United States, the band in question ends at 3.98 GHz, reducing our margin to 0.22 GHz (220 megahertz), about half. Two other countries shave the margin even more thinly.
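The margin arithmetic is simple enough to check directly. A minimal sketch using only the band edges quoted above (the variable and function names are mine):

```python
RADIO_ALTIMETER_BAND_START_MHZ = 4200  # bottom of the radio altimeter band

# Upper edge of the 5G band nearest the radio altimeter band, in MHz.
FIVE_G_UPPER_EDGE_MHZ = {
    "most of the world": 3800,
    "United States": 3980,
}


def guard_band_mhz(five_g_upper_edge_mhz: int) -> int:
    """Unused spectrum between the top of 5G and the radio altimeter band."""
    return RADIO_ALTIMETER_BAND_START_MHZ - five_g_upper_edge_mhz


for region, edge_mhz in FIVE_G_UPPER_EDGE_MHZ.items():
    print(f"{region}: {guard_band_mhz(edge_mhz)} MHz of separation")

ratio = guard_band_mhz(3980) / guard_band_mhz(3800)
print(f"U.S. margin is {ratio:.0%} of the margin elsewhere")
```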

8

Radio Altimeters

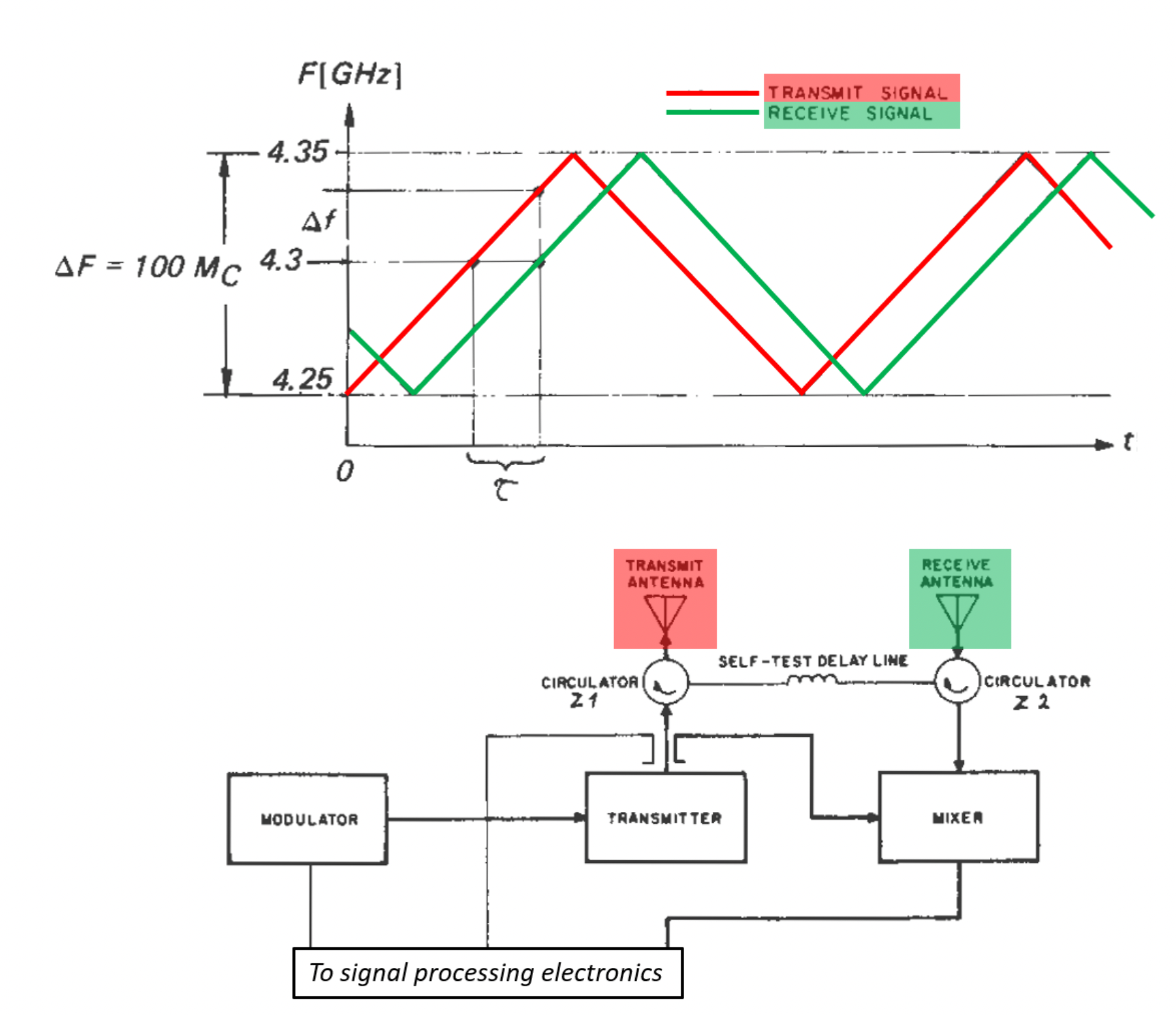

Radio altimeter basic principles, Engineering Pilot

Most of the radar altimeters used in aviation employ the so-called Frequency-Modulated Continuous Wave (FMCW) principle. A carrier signal is modulated to produce a “sweep” over a given frequency range. This signal is transmitted via a transmission antenna (red in [the] figure). After propagation, the reflected signal is received via another dedicated receiver antenna (green in [the] figure). Part of the transmit signal is coupled into the receiver path, where it is mixed with the received signal. This permits the determination of the frequency shift, which is representative for the propagation time and thus the distance traveled. The principle is depicted graphically in [the] figure.

The electronics involved are often analog and thus quite sensitive to interference. Only a few, more modern designs are based on digital technology and are thus more tolerant to disturbances.

How is this possible? Well, here is the problem:

The Minimum Operational Performance Standard (MOPS) RTCA DO-155 in its legacy form does not mandate specific interference protection.

The good news is that the typical radar altimeter installation allows for a Line-Replaceable-Unit (LRU) upgrade, so the wiring and antennae can remain untouched in many cases, should an upgrade become necessary.

Wait a minute – what about HIRF tolerance?

Those involved in airworthiness certification might ask the following question: How come the existing High Intensity Radiation Field (HIRF) guidelines do not provide sufficient protection? Aircraft can be exposed to a variety of HIRF environments, and the industry developed standard “HIRF” environments years ago to account for radiation exposure, such as ATC radars, weather radars and other transmitters. Some of these emit much more power than a 5G antenna will. Here is the catch:

The HIRF environments used for current airworthiness certification were calculated based on known transmission sources at the time and assumed certain slant ranges. 5G was simply not there.

The consequence of this is that the electric field strength levels in the current HIRF environments might not ensure sufficient protection against 5G interference.

What the industry did: RTCA special committee SC-239.

After it became known that the 5G communication system will use part of the spectrum quite close to the radar altimeter band, the Radio Technical Commission for Aeronautics (RTCA) established a task force to investigate the potential interference risk. The results of this investigation were published in a special report. The applied method involved gathering data from mobile communication companies and radar altimeter manufacturers. The interference tolerance of typical radar altimeters was determined using bench testing. Then, a simulation was carried out to determine the typical interference levels “seen” by an operational aircraft in a 5G environment. Combining these two data sources made it possible to determine where the interference margin is insufficient.

The report determined that significant interference has to be expected, should the 5G communication systems be rolled out without precautions.

Source: Engineering Pilot

Engineering Pilot does an excellent job of outlining the 5G versus radio altimeter issue and cites his references. I've deleted those references for readability but encourage you to read the linked post for more information: www.engineeringpilot.com.
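The FMCW principle described in the quoted passage can be sketched numerically. This assumes an idealized linear sweep and ignores Doppler, noise, and installation delays. The sweep bandwidth and sweep time below are hypothetical values picked for illustration; they are not taken from any particular radio altimeter or from the source.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

# Hypothetical sweep parameters, for illustration only.
SWEEP_BANDWIDTH_HZ = 100e6  # how far the transmitted frequency sweeps
SWEEP_TIME_S = 0.01         # how long one sweep takes


def beat_frequency_hz(height_m: float) -> float:
    """Frequency difference between the transmitted and received signals.

    The echo returns after the round trip time (2 * height / c). During
    that time the transmitter has moved on along its sweep, so the two
    signals differ by (sweep rate) * (round trip time).
    """
    round_trip_s = 2.0 * height_m / SPEED_OF_LIGHT_M_PER_S
    sweep_rate_hz_per_s = SWEEP_BANDWIDTH_HZ / SWEEP_TIME_S
    return sweep_rate_hz_per_s * round_trip_s


def height_from_beat_m(beat_hz: float) -> float:
    """Invert the relationship: recover height from the measured shift."""
    sweep_rate_hz_per_s = SWEEP_BANDWIDTH_HZ / SWEEP_TIME_S
    round_trip_s = beat_hz / sweep_rate_hz_per_s
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


true_height_m = 100.0
measured_beat_hz = beat_frequency_hz(true_height_m)
print(f"beat frequency: {measured_beat_hz:.1f} Hz")
print(f"recovered height: {height_from_beat_m(measured_beat_hz):.2f} m")
```

The point of the sketch is that the altimeter infers height from a small frequency difference in a faint reflected signal, which is why unwanted energy reaching the receiver can translate into a wrong or missing height.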

Do we have a problem here? Here is what the FAA has to say:

The Federal Aviation Administration (FAA) adopted new ADs (AD 2021-23-12 and AD 2021-23-13) for all transport and commuter category airplanes, and all helicopters, equipped with a radio altimeter. The radio altimeter is an important aircraft instrument, and its intended function is to provide direct height-above-terrain/water information to a variety of aircraft systems. Commercial aviation radio altimeters operate in the 4.2-4.4 GHz band, which is separated by 220 megahertz from the C-Band telecommunication systems in the 3.7-3.98 GHz band. These ADs were prompted by a determination that radio altimeters cannot be relied upon to perform their intended function if they experience interference. The ADs require revisions to the limitations section of the existing aircraft/airplane flight manual or rotorcraft flight manual, as applicable, to incorporate limitations prohibiting certain operations requiring radio altimeter data when in the presence of 5G C-Band interference in areas and at airports identified by NOTAMs. The FAA issued these ADs to address the unsafe condition on these products.

The radio altimeter is more precise than a barometric altimeter and for that reason is used where aircraft height over the ground needs to be precisely measured, such as during autoland or other low altitude operations. The receiver on the radio altimeter is typically highly accurate, however it may deliver erroneous results in the presence of out-of-band radio frequency emissions from other frequency bands. The radio altimeter must detect faint signals reflected off the ground to measure altitude, in a manner similar to radar. Out-of-band signals could significantly degrade radio altimeter functions if the altimeter is unable to sufficiently reject those signals.

While the FAA issued ADs 2021-23-12 and 2021-23-13 to address operations immediately at risk (e.g., those requiring a radio altimeter to land in low visibility conditions), a wide range of other automated safety systems rely on radio altimeter data whose proper function may also be affected. Anomalous (missing or erroneous) radio altimeter inputs could cause these other systems to operate in an unexpected way during any phase of flight - most critically during takeoff, approach, and landing phases. These anomalous inputs may not be detected by the pilot in time to maintain continued safe flight and landing. Operators and pilots should be aware of aircraft systems that integrate the radio altimeter, and should follow all Standard Operating Procedures related to aircraft safety system aural warnings/alerts.

These systems include, but are not limited to:

- Class A Terrain Awareness Warning Systems (TAWS-A)

- Enhanced Ground Proximity Warning Systems (EGPWS)

- Traffic Alert and Collision Avoidance Systems (TCAS II)

- Take-off guidance systems

- Flight Control (control surface)

- Tail strike prevention systems

- Windshear detection systems

- Envelope Protection Systems

- Altitude safety callouts/alerts

- Autothrottle

- Thrust reversers

- Flight Director

- Primary Flight Display of height above ground

- Alert/warning or alert/warning inhibit

- Stick pusher / stick shaker

- Engine and wing anti-ice systems

- Automatic Flight Guidance and Control Systems (AFGCS)

Source: SAFO 21007

Allow me to translate. Is 5G a problem for radio altimeters? We don't know. But it could be, so be careful! The FAA will issue NOTAMs for airspace, aerodromes, instrument approach procedures, and for special instrument approach procedures. Looking at an example aerodrome NOTAM can provide an idea of what to expect:

BDL AD AP RDO ALTIMETER UNREL. AUTOLAND, HUD TO TOUCHDOWN, ENHANCED FLT VISION SYSTEMS TO TOUCHDOWN NOT AUTHORIZED EXC FOR ACFT USING APPROVED ALTERNATIVE METHODS OF COMPLIANCE DUE TO 5G C-BAND INTERFERENCE PLUS SEE AIRWORTHINESS DIRECTIVE 2021-23-12

10

Conclusions?

Do we have a problem?

I don't know. We know that in some countries the margin between 5G and our radio altimeters has been cut in half but we don't know if that really causes radio altimeter interference because it wasn't a required test item back when the radio altimeter standard was developed. I haven't heard of any actual cases. (If you have, please let me know.) What we do know is that some aircraft are more tied to the radio altimeter than others.

A well known writer for a well known aviation publication says it is nothing to worry about. He has a long resume that includes the FAA and the NTSB so he takes the "What, me worry?" attitude.

I think this individual must have limited experience with modern aircraft. I've spent the last two years flying what very well may be the most advanced cockpit ever certified. Besides the long list of things to worry about listed in the previous section, we also have a Crew Alerting System that filters what it lets the pilot know, depending on the aircraft's speed and altitude. Like what? Let's say you have an engine fire. Until you get to 400 feet radio altimeter height, the system withholds that information from you. Do I worry about 5G interference? Yes I do. Looks like I picked a fine time to retire.

My advice: be mindful of any system with ties to the radio altimeter and insist that all crew and passengers have their mobile devices in airplane mode.

References

(Source material)

Engineeringpilot.com, 5G vs. Radar altimeter: a déjà vu, 19 January 2022

GSM Association (GSMA), Introducing radio spectrum, February 2017.

Haverans, Reinhardt, From 1G to 5G: A Brief History of the Evolution of Mobile Standards, 27 May 2021.

Qualcomm, Global update on spectrum for 4G & 5G, December 2020

Safety Alert for Operators (SAFO) 21007, Risk of Potential Adverse Effects on Radio Altimeters when Operating in the Presence of 5G C-Band Interference, 23 December 2021